Building UX-Aware Agents with Prompt Flow and Azure APIs

- Nicole Mocskonyi

- May 26, 2025


Artificial intelligence agents are evolving rapidly. What began as simple, rule-based bots has matured into a new generation of intelligent systems that understand natural language and adapt to context, workflows, and user behavior. These are UX-aware agents—AI systems that integrate deeply with user intent and business logic to deliver smarter, more relevant outcomes.

In this post, Cyann experts explain how developers, technical teams, and IT leaders can use Azure Prompt Flow and Azure APIs to design and deploy these contextual, intelligent agents. The goal is to help teams go beyond static automation and deliver richer, more responsive user experiences across enterprise environments.

Why UX Matters in AI Agents

Traditional bots, though useful, come with significant limitations. They are largely transactional and operate on fixed triggers, often producing rigid responses that fail to reflect the intent or emotional tone of the user. These systems typically do not retain context, making them less helpful in complex workflows.

In contrast, UX-aware agents prioritize interaction design and contextual awareness. They understand the user’s goal, adapt responses dynamically, and collaborate with business systems to complete meaningful tasks. For organizations, this shift results in:

- Faster, more intuitive digital workflows

- Reduced dependency on manual data lookup

- Fewer user errors and escalations

- Enhanced employee or customer satisfaction

By focusing on user experience, developers can transform AI from a transactional tool into a collaborative digital assistant.

Key Components of a UX-Aware Agent

Building an intelligent, contextual agent on Azure involves assembling several core technologies, each responsible for a part of the end-to-end logic:

Azure Prompt Flow

Prompt Flow provides a visual and code-first interface to orchestrate the agent's steps. Developers can chain together LLM prompts, Python tools, and API calls in a flow-based format, making workflows easier to debug, scale, and iterate.

Azure APIs

These enable the agent to interact with real-time business systems—CRM tools, analytics dashboards, databases, or SaaS platforms. The APIs deliver the data the LLM uses to provide accurate, personalized answers.

Large Language Models (LLMs)

LLMs like GPT models power the natural language understanding and generation. In Prompt Flow, prompts and context are passed to these models to handle questions, summaries, and reasoning.

Custom Tools

Developers can write Python functions to process data, invoke custom logic, or transform inputs/outputs between API calls and LLMs. This modular architecture provides both flexibility and control, making it easier to adapt the agent to evolving business needs.

| Component | Role |
| --- | --- |
| Azure Prompt Flow | Visually orchestrate LLMs, prompts, APIs, and logic as a modular flow |
| Azure APIs | Access business systems, databases, and external services |
| Large Language Models (LLMs) | Understand natural language, generate human-like responses |
| Custom Tools | Execute Python scripts, transform data, and make API calls |
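
To make the Custom Tools role a little more concrete, here is a minimal sketch of a Python function that reshapes an API response into compact text an LLM prompt can consume. The function name and the record fields (`name`, `region`, `amount`) are hypothetical and the sample data is made up; in Prompt Flow, a function like this would typically be registered as a Python tool.

```python
from typing import Any


def rows_to_prompt_context(rows: list[dict[str, Any]], limit: int = 5) -> str:
    """Turn API result rows into short, numbered lines an LLM can read.

    The field names used here are hypothetical placeholders.
    """
    lines = []
    for index, row in enumerate(rows[:limit], start=1):
        name = row.get("name", "unknown")
        region = row.get("region", "n/a")
        amount = row.get("amount", 0)
        lines.append(f"{index}. {name} ({region}): ${amount:,.0f}")
    return "\n".join(lines) if lines else "No records found."


# Made-up sample rows standing in for a real API response.
sample_rows = [
    {"name": "Contoso renewal", "region": "EMEA", "amount": 120000},
    {"name": "Fabrikam upsell", "region": "APAC", "amount": 45500},
]
context_text = rows_to_prompt_context(sample_rows)
```

Keeping this kind of formatting in a small, testable function means the prompt itself can stay short and the data shaping can change without touching the LLM step.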


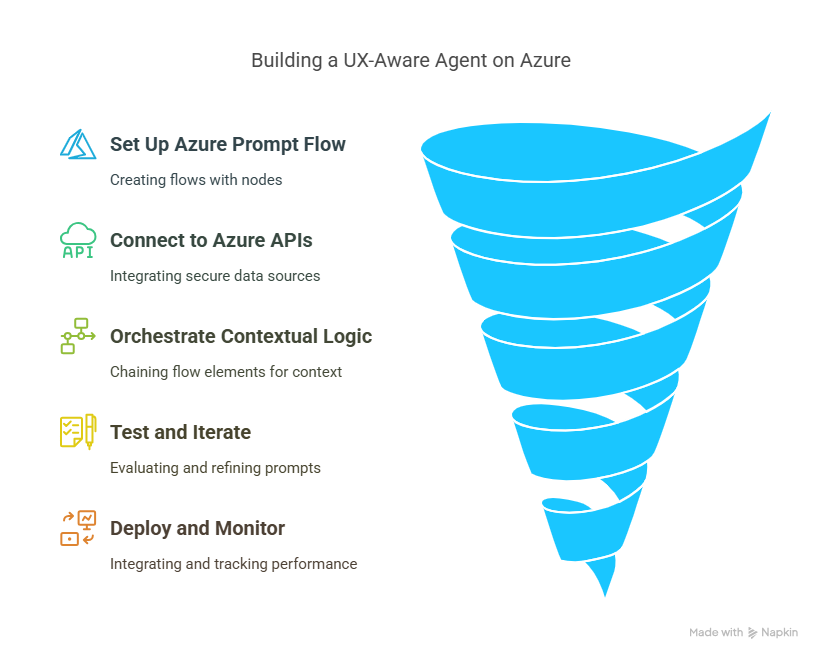

How to Build a UX-Aware Agent on Azure

The following step-by-step guide outlines the approach to building and deploying UX-aware agents using Azure Prompt Flow and Azure APIs.

1. Define the User Experience

The development process should begin by identifying specific use cases your agent will address. These might include:

- “Show me today’s top five opportunities by region”

- “Summarize unresolved support tickets from this week”

- “Create a task and assign it to a project team”

Each of these prompts should be mapped to a workflow that includes:

- Extracting intent using the LLM

- Calling relevant APIs or executing logic

- Transforming and returning results in a readable format

Aligning the agent’s design with real user behavior ensures relevance and usability from day one.
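
One way to picture this mapping is as a small routing table from intent to workflow. The sketch below is an assumption-laden illustration, not a Prompt Flow API: the intent labels, the `Workflow` class, and the sample data are all hypothetical, and the LLM intent-extraction step is replaced by a simple keyword match so the example runs offline.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Workflow:
    call_backend: Callable[[], list[str]]   # stands in for an API call
    render: Callable[[list[str]], str]      # turns results into readable text


def extract_intent(utterance: str) -> str:
    """Placeholder for the LLM step: a keyword match, NOT a real model call."""
    text = utterance.lower()
    if "opportunit" in text:
        return "top_opportunities"
    if "ticket" in text:
        return "ticket_summary"
    return "unknown"


# Hypothetical workflows with made-up data in place of live systems.
WORKFLOWS = {
    "top_opportunities": Workflow(
        call_backend=lambda: ["Deal A", "Deal B"],
        render=lambda items: "Top opportunities: " + ", ".join(items),
    ),
    "ticket_summary": Workflow(
        call_backend=lambda: ["#101 login failure", "#102 slow export"],
        render=lambda items: f"{len(items)} unresolved tickets: " + "; ".join(items),
    ),
}


def handle(utterance: str) -> str:
    """Extract intent, run the matching workflow, and return readable text."""
    workflow = WORKFLOWS.get(extract_intent(utterance))
    if workflow is None:
        return "Sorry, I can't help with that yet."
    return workflow.render(workflow.call_backend())


reply = handle("Summarize unresolved support tickets from this week")
```

Writing the table out early also makes gaps visible: any prompt from your use-case list that falls through to the fallback message is a workflow you have not designed yet.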

2. Set Up Azure Prompt Flow

Start by creating a new project in Azure AI Foundry (formerly Azure AI Studio). You can build flows in two main ways: with the drag-and-drop visual editor or by authoring YAML files directly.

Each flow is composed of nodes:

- Prompt nodes: Interact with the LLM to interpret or generate language

- Tool nodes: Execute Python logic or perform data transformation

- API nodes: Connect to external services or data sources

Organize these nodes to reflect the sequence of tasks the agent will execute. For example, a query-to-summary task might involve: prompt → API call → transformation → LLM summary.
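
The query-to-summary sequence can be sketched in plain Python as an ordered list of nodes, each reading the outputs of earlier nodes by name. This is only a conceptual model of how a flow wires nodes together, not Prompt Flow's own runtime; the `run_flow` helper, the node functions, and the numbers are all hypothetical stand-ins for real LLM and API calls.

```python
from typing import Any, Callable

# Each node: (name, function, names of earlier outputs it consumes).
Node = tuple[str, Callable[..., Any], list[str]]


def run_flow(nodes: list[Node], flow_input: Any) -> dict[str, Any]:
    """Run nodes in order; each node reads earlier outputs by name."""
    outputs: dict[str, Any] = {"input": flow_input}
    for name, func, needs in nodes:
        outputs[name] = func(*(outputs[n] for n in needs))
    return outputs


# Stub implementations; a real flow would call an LLM and an HTTP API here.
def interpret(question: str) -> dict:
    return {"metric": "sales", "period": "this week"}


def fetch(criteria: dict) -> list[int]:
    return [120, 80, 100]


def transform(values: list[int]) -> dict:
    return {"total": sum(values), "count": len(values)}


def summarize(criteria: dict, stats: dict) -> str:
    return (
        f"{criteria['metric'].title()} {criteria['period']}: "
        f"{stats['total']} across {stats['count']} records."
    )


flow = [
    ("prompt", interpret, ["input"]),
    ("api_call", fetch, ["prompt"]),
    ("transformation", transform, ["api_call"]),
    ("llm_summary", summarize, ["prompt", "transformation"]),
]
result = run_flow(flow, "How were sales this week?")
```

Note that the final node consumes both the parsed criteria and the transformed data, which is how context from an early step stays available later in the chain.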

3. Connect to Azure APIs

To retrieve or submit data, the agent must connect to back-end systems via secure APIs. Azure supports:

- REST APIs for custom apps or third-party services

- Azure SQL or Cosmos DB for application data

- Azure AI Search (formerly Azure Cognitive Search) for indexing documents or knowledge bases

API tools can be defined in YAML and reused across flows. A simplified connector definition might look like this:

```yaml
tools:
  - name: SalesAPI
    type: api
    endpoint: https://api.company.com/sales
```
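
On the Python side, a tool that calls such an endpoint might look roughly like the sketch below. It uses only the standard library and the placeholder URL from the connector definition above; the `region` and `top` query parameters, the bearer-token scheme, and the function names are assumptions, since the real API contract is not specified here.

```python
import json
from urllib.parse import urlencode
from urllib.request import Request, urlopen

SALES_ENDPOINT = "https://api.company.com/sales"  # placeholder URL, not a live service


def build_sales_request(region: str, top: int, token: str) -> Request:
    """Build (but do not send) a GET request.

    `region` and `top` are hypothetical query parameters.
    """
    query = urlencode({"region": region, "top": top})
    return Request(
        f"{SALES_ENDPOINT}?{query}",
        headers={"Authorization": f"Bearer {token}", "Accept": "application/json"},
        method="GET",
    )


def fetch_sales(request: Request, timeout: float = 10.0) -> list:
    """Send the request and decode the JSON body."""
    with urlopen(request, timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))


# Building the request is safe offline; fetch_sales() would need a real endpoint.
request = build_sales_request("EMEA", 5, token="<token>")
```

Separating request construction from sending keeps the part that encodes business parameters easy to unit test without network access.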

It is important to follow best practices for security, such as:

- Storing API keys and secrets in Azure Key Vault

- Using managed identities to avoid hardcoded credentials

- Applying role-based access control (RBAC) where applicable

This enables your agent to retrieve accurate, real-time data without compromising system integrity.
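
As a rough sketch of the first two practices, the code below resolves a secret at runtime instead of embedding it in the flow. It assumes the `azure-identity` and `azure-keyvault-secrets` packages for the Key Vault path (imported lazily, so the rest runs without them); the `load_secret` helper, the environment-variable naming rule, and the secret name are hypothetical choices for illustration.

```python
import os
from typing import Callable, Optional


def key_vault_fetcher(vault_url: str) -> Callable[[str], str]:
    """Return a function that reads secrets from Azure Key Vault.

    Needs azure-identity and azure-keyvault-secrets; the imports are
    deferred so the rest of this sketch runs without those packages.
    DefaultAzureCredential can use a managed identity when one is available.
    """
    from azure.identity import DefaultAzureCredential
    from azure.keyvault.secrets import SecretClient

    client = SecretClient(vault_url=vault_url, credential=DefaultAzureCredential())
    return lambda name: client.get_secret(name).value


def load_secret(name: str, fetch: Optional[Callable[[str], str]] = None) -> str:
    """Resolve a secret from the environment first, then from `fetch`.

    The environment lookup is an assumed convenience for local development:
    'sales-api-key' is read from the SALES_API_KEY variable.
    """
    value = os.environ.get(name.upper().replace("-", "_"))
    if value:
        return value
    if fetch is not None:
        return fetch(name)
    raise KeyError(f"Secret {name!r} not found; refusing to use a hardcoded value.")
```

In a deployed flow you might call `load_secret("sales-api-key", fetch=key_vault_fetcher(vault_url))`, where `vault_url` points at your own vault. Failing loudly when no source is configured is deliberate: it prevents a forgotten default key from quietly reaching production.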

4. Orchestrate Contextual Logic

UX-aware behavior is achieved by chaining flow elements that can retain and use context across multiple steps.

For instance:

- The user asks for a filtered report.

- The LLM parses the request and extracts criteria.

- A Python tool formats these into API parameters.

- The API node fetches results.

- A final LLM node summarizes the results in natural language.

This chaining process helps the agent move beyond query execution into active interpretation and decision-making—key characteristics of a UX-aware agent.
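
The hand-off from the LLM to the API is the step most likely to break, so it helps to see it in code. Here is a minimal sketch of a Python tool that takes the JSON an LLM was asked to return, keeps only known filter fields, and formats them as query parameters. The field names in `ALLOWED_FIELDS` and the sample LLM output are hypothetical.

```python
import json
from urllib.parse import urlencode

# Hypothetical filter names the downstream API is assumed to accept.
ALLOWED_FIELDS = {"region", "status", "date_from", "date_to"}


def parse_criteria(llm_output: str) -> dict:
    """Parse the JSON an LLM was asked to return, keeping only known fields."""
    try:
        raw = json.loads(llm_output)
    except json.JSONDecodeError:
        return {}
    if not isinstance(raw, dict):
        return {}
    return {
        key: value
        for key, value in raw.items()
        if key in ALLOWED_FIELDS and value not in (None, "")
    }


def to_query_string(criteria: dict) -> str:
    """Format validated criteria as a sorted, URL-encoded query string."""
    return urlencode(sorted(criteria.items()))


# Made-up model output; "owner" is dropped because it is not an allowed field.
llm_output = '{"region": "North America", "status": "open", "owner": "me"}'
criteria = parse_criteria(llm_output)
query_string = to_query_string(criteria)
```

Treating model output as untrusted input, with an allow-list and a safe fallback for malformed JSON, keeps a misread request from turning into an unexpected API call.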

5. Test and Iterate

Azure Prompt Flow provides tools to evaluate prompt performance. Developers can simulate real-world inputs, compare prompt variants, and identify failure points before deployment.

Good practices include:

- Testing with edge-case data and ambiguous phrasing

- Reviewing LLM outputs for accuracy and tone

- Iterating based on user feedback or business metrics

Prompt tuning and testing are ongoing tasks, especially as use cases and user expectations evolve.
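
The idea of comparing prompt variants against a fixed test set can be sketched in a few lines. This is a toy harness, not Prompt Flow's built-in evaluation tooling: the test cases, the two prompt templates, and `fake_model` (a deterministic stand-in for an LLM, written so that the second variant handles slang) are all hypothetical.

```python
from typing import Callable

# Hypothetical test inputs paired with the intent label we expect back.
TEST_CASES = [
    ("Show me open tickets", "ticket_summary"),
    ("tix still unresolved??", "ticket_summary"),
    ("Create a task for the design team", "create_task"),
]

# Two prompt variants to compare; {text} is replaced with the user input.
VARIANTS = {
    "baseline": "Classify the request.\nUser: {text}",
    "slang_aware": "Classify the request. Treat slang and abbreviations as valid.\nUser: {text}",
}


def fake_model(prompt: str) -> str:
    """Deterministic stand-in for an LLM so the harness runs offline."""
    instructions, _, user_text = prompt.rpartition("User:")
    user_text = user_text.lower()
    understands_slang = "slang" in instructions.lower()
    if "ticket" in user_text or (understands_slang and "tix" in user_text):
        return "ticket_summary"
    if "task" in user_text:
        return "create_task"
    return "unknown"


def evaluate(template: str, model: Callable[[str], str]) -> tuple[float, list[str]]:
    """Return accuracy and the inputs that failed for one prompt variant."""
    failures = [
        text
        for text, expected in TEST_CASES
        if model(template.format(text=text)) != expected
    ]
    return 1 - len(failures) / len(TEST_CASES), failures


results = {name: evaluate(template, fake_model) for name, template in VARIANTS.items()}
```

Returning the failing inputs alongside the score matters more than the score itself: the failures are what tell you which phrasing to add to the next round of tests.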

6. Deploy and Monitor the Agent

Once the agent is ready, you can deploy it as an Azure AI endpoint. These endpoints can be integrated into web applications, mobile apps, chat interfaces, or internal tools.

For ongoing operations, Azure provides monitoring tools:

- Azure Monitor for system-level metrics and errors

- Application Insights for user behavior tracking

- Logging tools to capture usage patterns and exceptions

This observability ensures the agent can be refined over time and remain aligned with user needs.
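
On the application side, observability starts with emitting consistent records for each agent step. The sketch below uses only Python's standard `logging` module to log outcome and latency as one JSON line per step; the logger name, the record fields, and the `run_with_telemetry` helper are hypothetical, and forwarding these records to Azure Monitor or Application Insights would require an exporter that is not shown here.

```python
import json
import logging
import time
from typing import Any, Callable

logger = logging.getLogger("agent.telemetry")  # hypothetical logger name


def run_with_telemetry(step_name: str, func: Callable[..., Any], *args: Any) -> Any:
    """Run one agent step and log its outcome and latency as a JSON line."""
    started = time.perf_counter()
    status = "error"
    try:
        result = func(*args)
        status = "ok"
        return result
    finally:
        record = {
            "step": step_name,
            "status": status,
            "duration_ms": round((time.perf_counter() - started) * 1000, 2),
        }
        # Successful steps log at INFO, failures at ERROR; exceptions still propagate.
        log = logger.info if status == "ok" else logger.error
        log(json.dumps(record))


logging.basicConfig(level=logging.INFO)
total = run_with_telemetry("sum_values", sum, [1, 2, 3])
```

Structured, per-step records make it straightforward to chart which node in a flow is slow or failing, rather than only seeing that the agent as a whole misbehaved.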


Advanced Use Case: Retrieval-Augmented Generation (RAG)

Many enterprise use cases require grounding responses in internal documents or real-time business data. Retrieval-Augmented Generation (RAG) addresses this by combining LLM reasoning with contextual information from external sources.

Using Azure AI Search (formerly Azure Cognitive Search) and Prompt Flow, developers can build flows that:

- Retrieve relevant documents based on a user query

- Inject these into the prompt context

- Generate personalized responses grounded in business facts

For example, an internal knowledge assistant could answer compliance-related questions by retrieving excerpts from policy documents and summarizing them.

RAG-enabled agents are beneficial for knowledge management, onboarding, policy interpretation, and customer support.
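
The retrieve-then-inject pattern is easy to see in miniature. In the sketch below, a toy word-overlap ranking stands in for a real search index, and the result is a grounded prompt that would then be sent to an LLM. The policy snippets are fabricated, and the function names and prompt wording are hypothetical; a production flow would query a search service instead of the in-memory dictionary.

```python
import re

# Fabricated policy snippets for illustration only.
DOCUMENTS = {
    "travel-policy": "Employees must book flights at least 14 days in advance.",
    "expense-policy": "Expense reports are due within 30 days of purchase.",
    "security-policy": "Laptops must use full disk encryption.",
}


def tokenize(text: str) -> set[str]:
    """Lowercase the text and split it into a set of alphanumeric words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query: str, top_k: int = 2) -> list[tuple[str, str]]:
    """Rank documents by words shared with the query (toy stand-in for a search index)."""
    query_terms = tokenize(query)
    scored = [
        (len(query_terms & tokenize(text)), doc_id, text)
        for doc_id, text in DOCUMENTS.items()
    ]
    scored.sort(key=lambda item: (-item[0], item[1]))
    return [(doc_id, text) for score, doc_id, text in scored[:top_k] if score > 0]


def build_grounded_prompt(query: str) -> str:
    """Inject retrieved excerpts into the prompt that would be sent to the LLM."""
    excerpts = retrieve(query)
    sources = "\n".join(f"[{doc_id}] {text}" for doc_id, text in excerpts)
    return (
        "Answer using only the sources below and cite them by id.\n"
        f"Sources:\n{sources or '(no matching documents)'}\n"
        f"Question: {query}"
    )


prompt = build_grounded_prompt("When are expense reports due?")
```

Dropping zero-score documents and saying so explicitly in the prompt gives the model a clear signal to decline rather than improvise when nothing relevant was found.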

Conclusion

The transition from static bots to UX-aware agents represents a meaningful evolution in enterprise AI. These agents are not only smarter—they are also more relevant, more adaptable, and more aligned with the way users expect to interact with digital systems.

By leveraging Azure Prompt Flow for orchestration and Azure APIs for integration, organizations can move beyond command execution and start delivering true collaboration between users and AI.

Cyann helps businesses design, build, and scale these intelligent agents—bringing technical expertise, architectural guidance, and real-world experience in Azure development to every engagement.

How Cyann Can Help

At Cyann, we specialize in building AI-native systems that operate at enterprise scale. Our services include:

- Strategic advisory on UX-aware agent design

- Development of modular Prompt Flow solutions

- Integration with Azure APIs, databases, and services

- Deployment and monitoring using Azure best practices

- Ongoing optimization, testing, and security management

If your organization is exploring how to build intelligent agents on Azure, Cyann can help accelerate your roadmap and reduce time to value.

To learn more or start a pilot, contact Cyann or explore the Azure Prompt Flow documentation.
